MSU Super-Resolution for Video Compression Benchmark 2022

Discover SR methods for compressed videos and choose the best model to use with your codec

Key features of the Benchmark

- Diverse dataset
  - H.264, H.265, H.266, AV1, AVS3 codec standards
  - More than 260 test videos
  - 6 different bitrates
- Various charts
  - Visual comparison for more than 80 SR+codec pairs
  - RD curves and bar charts for 5 objective metrics
  - SR+codec pairs ranked by BSQ-rate
- Extensive report
  - 80+ pages with different plots
  - 15 SOTA SR methods and 6 objective metrics
- Extensive subjective comparison with 5300+ valid participants
  - Powered by Subjectify.us

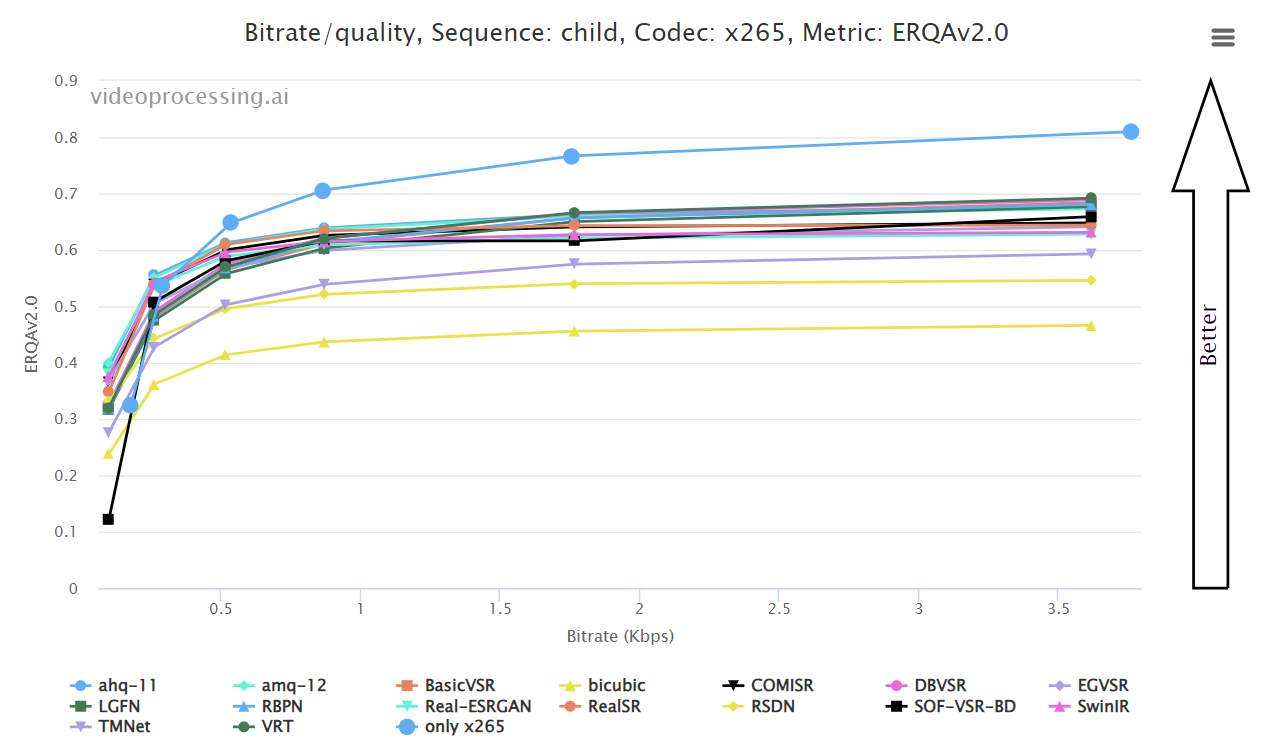

Charts

This section presents RD (rate-distortion) curves, which plot quality against bitrate for each SR+codec pair, and bar charts, which show the BSQ-rate calculated from objective metrics and subjective scores.

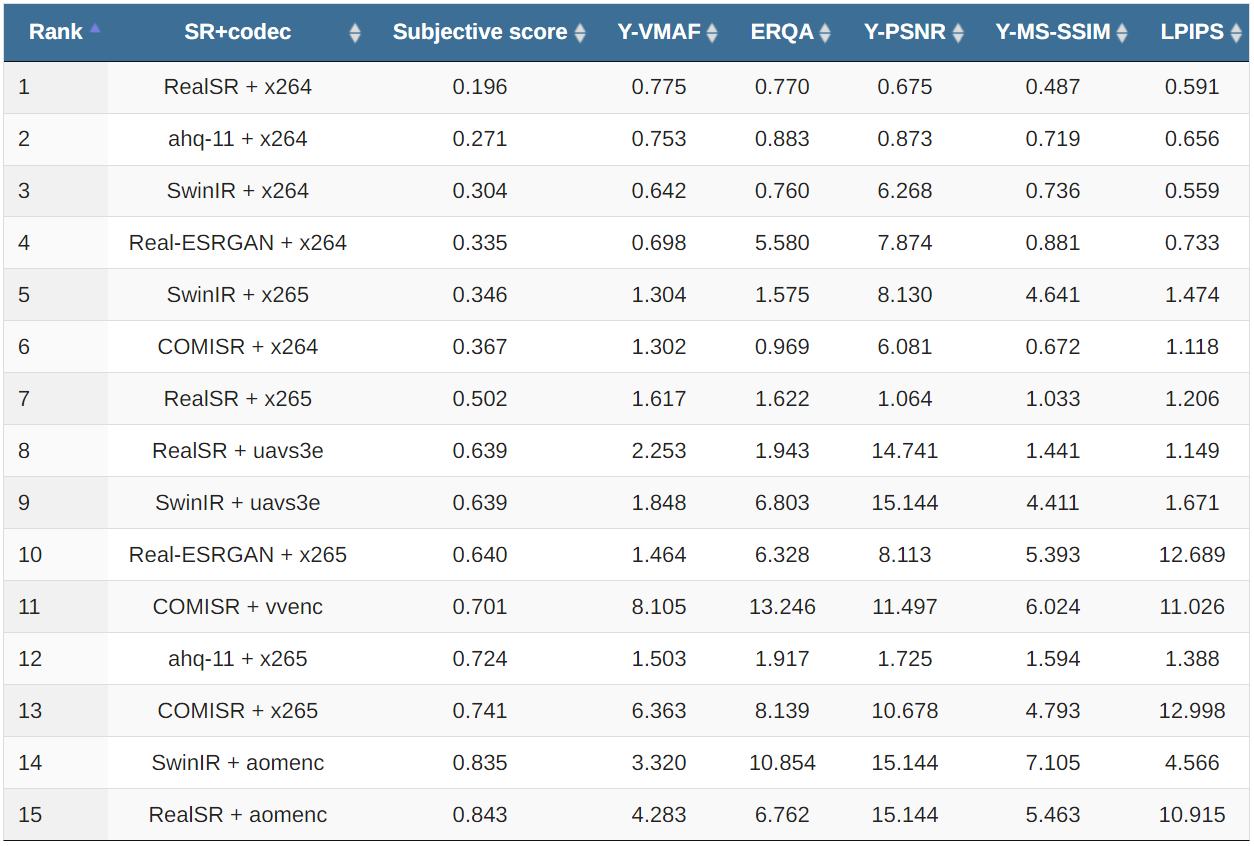

Leaderboard

The table below compares all pairs of Super-Resolution algorithms and codecs. Each column shows the BSQ-rate computed for a specific metric.

You can see the full leaderboard here.

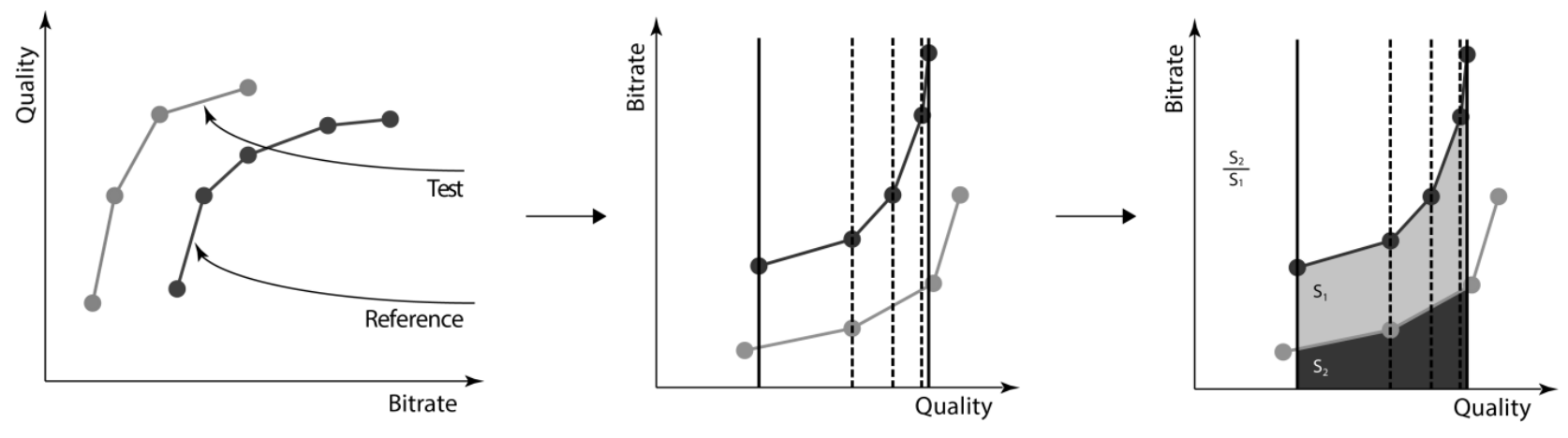

BSQ-rate

Learn about BSQ-rate calculation in the methodology of the benchmark.
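The exact BSQ-rate definition is given in the methodology and is not reproduced here. As a rough illustration only, reading the name as "bitrate for the same quality", the sketch below compares two RD curves by averaging the ratio of their bitrates at equal quality over the quality range they share. The function names, the piecewise-linear interpolation in the log-bitrate domain, and the sample values are all assumptions made for this example and may differ from the benchmark's actual calculation.

```python
import math


def log_bitrate_at(quality, curve):
    """Interpolate log(bitrate) at a given quality on an RD curve.

    curve: list of (bitrate, quality) points with distinct quality values.
    Piecewise-linear interpolation is an assumption of this sketch.
    """
    pts = sorted(curve, key=lambda p: p[1])
    for (b0, q0), (b1, q1) in zip(pts, pts[1:]):
        if q0 <= quality <= q1:
            t = (quality - q0) / (q1 - q0)
            return math.log(b0) + t * (math.log(b1) - math.log(b0))
    raise ValueError("quality is outside the curve's range")


def same_quality_bitrate_ratio(test_curve, ref_curve, samples=100):
    """Geometric-mean ratio of test bitrate to reference bitrate at equal quality.

    A value below 1 means the test pair needs less bitrate than the
    reference to reach the same quality (hypothetical helper, not the
    benchmark's official BSQ-rate code).
    """
    lo = max(min(q for _, q in test_curve), min(q for _, q in ref_curve))
    hi = min(max(q for _, q in test_curve), max(q for _, q in ref_curve))
    if lo >= hi:
        raise ValueError("curves do not overlap in quality")
    total = 0.0
    for i in range(samples):
        # Sample the shared quality range at interval midpoints.
        q = lo + (hi - lo) * (i + 0.5) / samples
        total += log_bitrate_at(q, test_curve) - log_bitrate_at(q, ref_curve)
    return math.exp(total / samples)


# Made-up (bitrate, quality) points, for illustration only.
reference = [(100, 30.0), (200, 35.0), (400, 40.0)]
candidate = [(50, 30.0), (100, 35.0), (200, 40.0)]
ratio = same_quality_bitrate_ratio(candidate, reference)
```

In this made-up case the candidate reaches every quality level at half the reference bitrate, so the ratio comes out at about 0.5.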

Report

Your method submission

Verify your method’s ability to restore compressed videos and compare it with other algorithms.

- Download input data
  - Download low-resolution input videos as sequences of frames in PNG format.
- Apply your algorithm
  - Apply your Super-Resolution algorithm to upscale frames to 1920×1080 resolution.
- Send the results to sr-codecs-benchmark@videoprocessing.ai
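Before sending results, it may be worth confirming that every upscaled frame really is 1920×1080. The sketch below does this with the Python standard library only, by reading the width and height from the IHDR chunk at the start of each PNG file. `png_size` and `check_frames` are hypothetical helper names, and the layout of one folder of `.png` frames is an assumption of this example, not something the submission rules specify.

```python
import struct
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(header):
    """Return (width, height) from the first 24 bytes of a PNG file.

    A PNG starts with an 8-byte signature followed by the IHDR chunk:
    4-byte length, the tag b"IHDR", then big-endian width and height.
    """
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError("not a PNG header")
    return struct.unpack(">II", header[16:24])


def check_frames(directory, expected=(1920, 1080)):
    """List the PNG files in `directory` whose size differs from `expected`."""
    mismatched = []
    for path in sorted(Path(directory).glob("*.png")):
        with open(path, "rb") as f:
            if png_size(f.read(24)) != expected:
                mismatched.append(path.name)
    return mismatched
```

Calling `check_frames("output_frames")` on a folder of results (the folder name is a placeholder) returns an empty list when every frame has the expected resolution.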

If you would like to participate, read the full submission rules here.

Contacts

For questions and suggestions, please contact us: sr-codecs-benchmark@videoprocessing.ai


Written on April 22, 2022